The architecture and design behind any web stack is key to scalability, and at ROBLOX, we aim to provide simple solutions to complex problems. Today, Vice President of Web Engineering & Operations, Keith V. Lucas, and Technical Director of Web Architecture and Scaling, Matt Dusek, explain the scale-out ROBLOX has done and what motivated these changes.

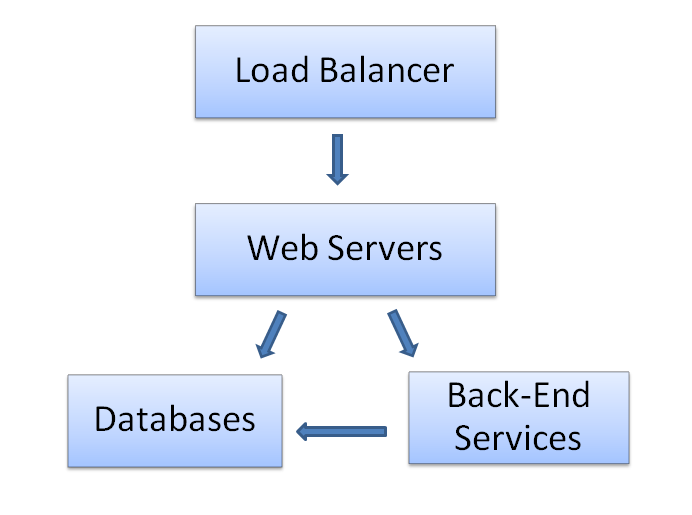

The ROBLOX web stack has evolved considerably since it began. We started with a traditional two-tiered architecture plus some back-end services, which looks like this:

The design was simple, and it supported very rapid development cycles. We discussed more sophisticated enterprise architectures at the time, but rejected them in favor of fast development. Sometimes we kick ourselves now, but only a little bit – ROBLOX has grown in part because of the speed and nimbleness of our engineering team.

The original design eventually broke due to increases in the following:

- number of inbound requests

- number of engineers (aka number of changesets / week)

- number of things we want to know

- number of client devices talking to back-end services

and to decreases in:

- tolerance for site disruptions

- tolerance for potential data loss risks

- infrastructure costs per online user

In terms of scale, we are now operating at roughly 75,000 database requests per second, 30,000 web-farm requests per second, and over 1 PB per month of CDN traffic. Our content store is responsible for roughly 1% of all the objects stored in the Amazon S3 cloud. Currently, we have about 200 pieces of equipment at PEER1, and many game servers distributed throughout North America.

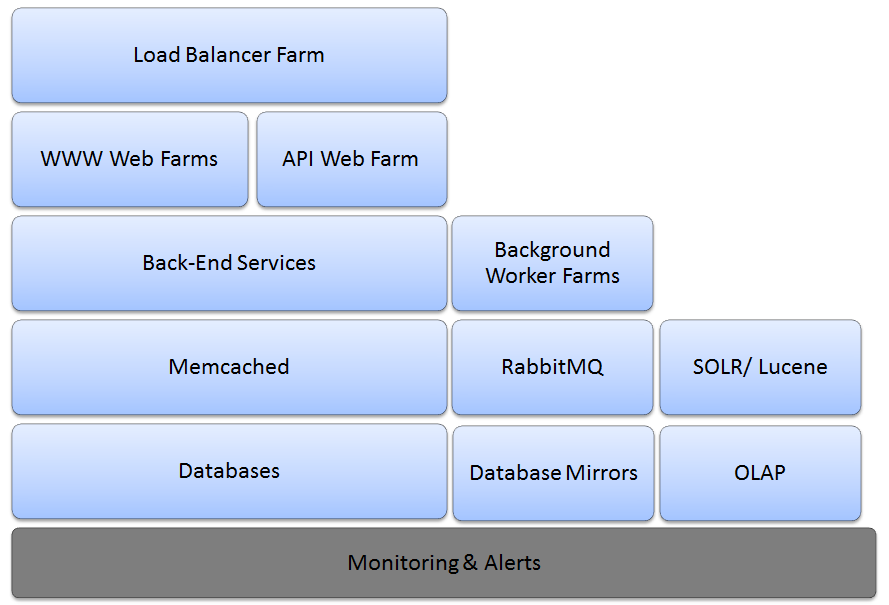

Here is what our system looks like today:

Here is a list of the scale-out that we’ve done, roughly in chronological order, along with the driving factors behind the changes.

| Change | Driving Factors |
| --- | --- |
| Horizontally Scaled Out Web and Database Farms | Load |
| Monitoring & Alerts | Predict, avoid, or minimize site disruptions; increase our understanding of the entire system to identify bottlenecks and failure points, both before and after issues. |
| Memcached | Cost: shift load from expensive databases to cheaper memcached, then scale out memcached. |
| Background Worker Farm | Quality of Service + Cost: migrate all processing away from web servers that deliver real-time content to background processors, allowing web servers to operate more efficiently and consistently (i.e. no cyclical processing “hiccups”). |
| Provisioning Models | Load + Cost: have enough servers to keep up with growth, but not so many that costs climb; our provisioning models have been essential to both. |
| Split WWW Farm into Multiple Smaller Farms | Quality of Service + Cost: our servers fall into a few different performance patterns based on load; splitting them into dedicated farms not only allows them to perform more consistently (less variance in all key metrics), but also allows us to better predict how many servers we really need. |
| SOLR / Lucene | Load: our original full-text database search could not keep up with user load, content growth, or our feature wish list; SOLR/Lucene is a core element of our search & discovery. |
| API Service Farm | Multiple Client Devices + Engineering Team Scale: our API service farm is composed of individually developed, deployed, and run components, all accessible to the website, the ROBLOX client, and our iPhone App (more to come). |
| OLAP | Business Intelligence: “deep dive” queries didn’t last long on production servers. |
| Database Mirrors | Disaster Recovery: early on, we developed a low-cost system to automatically back up, ship, and restore all of our databases every few minutes; we have since moved to real-time mirroring. |
| Horizontally Scaled Out our Load Balancing | Load: we currently have an active-active load balancing mesh that distributes incoming load across multiple devices, giving us both high availability and horizontal scale-out. |
| RabbitMQ | Load + Quality of Service: as we start interacting with our newer cloud services at Amazon, we’re initially using RabbitMQ to protect our internal systems against the intermittent high latencies of our remote systems; we’ll soon be expanding this throughout our back-end to decouple our sub-systems more effectively. |
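To make the memcached row concrete, here is a minimal sketch of the usual cache-aside pattern: check the cache first, and only go to the database on a miss. This is an illustration, not ROBLOX's actual code. `TTLCache` is a hypothetical in-process stand-in for a memcached client, and `slow_database_query` and the key format are made up for the example.

```python
import time
from typing import Callable, Dict, List, Optional, Tuple


class TTLCache:
    """Hypothetical in-process stand-in for a memcached client."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        # key -> (expiry timestamp, cached value)
        self._items: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._items[key]  # expired entries count as a miss
            return None
        return value

    def set(self, key: str, value: str, ttl_seconds: float) -> None:
        self._items[key] = (self._clock() + ttl_seconds, value)


def cached_lookup(
    cache: TTLCache,
    key: str,
    load_from_db: Callable[[str], str],
    ttl_seconds: float = 60.0,
) -> str:
    """Cache-aside read: serve from cache, fall back to the database on a miss."""
    value = cache.get(key)
    if value is None:
        value = load_from_db(key)  # the expensive path
        cache.set(key, value, ttl_seconds)
    return value


db_calls: List[str] = []


def slow_database_query(key: str) -> str:
    """Made-up database call that records how often it is hit."""
    db_calls.append(key)
    return f"profile-for-{key}"


cache = TTLCache()
first = cached_lookup(cache, "user:42", slow_database_query)
second = cached_lookup(cache, "user:42", slow_database_query)
```

After the two lookups, `db_calls` holds a single entry: the second read never reaches the database, which is the load shift the table describes.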

Finally, as we’ve scaled out our infrastructure, we’ve organized around a few themes:

Build Narrowly-Scoped, Composable Components

We do not shy away from complex systems, especially when that’s what’s required to do the job. However, we strive for simple solutions to complex problems. Component design follows good coding practices: compose sophisticated behavior from simple, easy-to-understand constituent pieces. More and more of our user-facing features are the result of interactions between very simple and fast individual services. Our in-RAM datastore backing our presence service easily processes tens of thousands of requests per second with minimal CPU overhead (under 10%) — because that’s all it does.
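As a rough illustration of how small a narrowly scoped component can be, the sketch below models a presence service as nothing more than a map from user to last-heartbeat time. It is not the real ROBLOX presence service; the class name, the 60-second timeout, and the heartbeat model are all assumptions made for the example.

```python
import time
from typing import Callable, Dict


class PresenceStore:
    """Hypothetical in-RAM presence store: one dict, two operations."""

    def __init__(
        self,
        timeout_seconds: float = 60.0,  # assumed timeout, not from the article
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._timeout = timeout_seconds
        self._clock = clock
        self._last_seen: Dict[int, float] = {}

    def heartbeat(self, user_id: int) -> None:
        """Record that the user was seen just now."""
        self._last_seen[user_id] = self._clock()

    def is_online(self, user_id: int) -> bool:
        """A user is online if a heartbeat arrived within the timeout."""
        seen = self._last_seen.get(user_id)
        return seen is not None and self._clock() - seen < self._timeout


# Drive the store with a fake clock so the example is deterministic.
fake_now = [0.0]
presence = PresenceStore(timeout_seconds=60.0, clock=lambda: fake_now[0])

presence.heartbeat(1001)
online_right_away = presence.is_online(1001)

fake_now[0] = 61.0  # more than the timeout has passed with no heartbeat
online_after_timeout = presence.is_online(1001)
```

Both operations are a single dictionary access, which is why a service shaped like this can stay cheap per request.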

We Are a ROBUX Bank

When it comes to both real and virtual currency transactions, ROBLOX is a bank: transactions must be auditable, responses must be immediate, and failures must be handled deterministically and deliberately. When we work on these features, we behave a bit more like an old-school software shop in both process and design. Our billing systems have more fail-safes, checks, and audited queues than any other system. They are also subject to more design reviews, more deliberation, more testing, and more sophisticated rollout strategies. Our virtual economy has an extensive audit trail that allows us to trace money and transactions with impressive fidelity; we can, for example, track the distribution of a single ROBUX grant (e.g. received through a currency purchase) as it travels via user-to-user transactions to hundreds and even thousands of users.
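One way to picture the grant tracing described above is a ledger in which every unit of currency stays tagged with the grant it came from, so each transfer leaves an audit entry per grant. The sketch below is a toy model under that assumption, not ROBLOX's billing design; `Ledger`, `AuditEntry`, and the oldest-grant-first spending rule are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class AuditEntry:
    grant_id: str
    sender: str  # "SYSTEM" marks the original grant
    receiver: str
    amount: int


class Ledger:
    """Toy ledger that keeps every balance tagged by originating grant."""

    def __init__(self) -> None:
        # balances[user][grant_id] -> units of that grant the user holds
        self._balances: Dict[str, Dict[str, int]] = defaultdict(dict)
        self.audit_log: List[AuditEntry] = []

    def balance(self, user: str) -> int:
        return sum(self._balances[user].values())

    def grant(self, grant_id: str, user: str, amount: int) -> None:
        self._credit(user, grant_id, amount)
        self.audit_log.append(AuditEntry(grant_id, "SYSTEM", user, amount))

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        # Validate up front so a failed transfer changes nothing.
        if amount <= 0:
            raise ValueError("amount must be positive")
        if self.balance(sender) < amount:
            raise ValueError("insufficient funds")
        remaining = amount
        # Spend the sender's grants in the order they were received.
        for grant_id in list(self._balances[sender]):
            if remaining == 0:
                break
            take = min(remaining, self._balances[sender][grant_id])
            self._balances[sender][grant_id] -= take
            if self._balances[sender][grant_id] == 0:
                del self._balances[sender][grant_id]
            self._credit(receiver, grant_id, take)
            self.audit_log.append(AuditEntry(grant_id, sender, receiver, take))
            remaining -= take

    def recipients_of(self, grant_id: str) -> Set[str]:
        """Everyone who has ever held part of the given grant."""
        return {e.receiver for e in self.audit_log if e.grant_id == grant_id}

    def _credit(self, user: str, grant_id: str, amount: int) -> None:
        lots = self._balances[user]
        lots[grant_id] = lots.get(grant_id, 0) + amount


ledger = Ledger()
ledger.grant("g1", "alice", 100)
ledger.transfer("alice", "bob", 30)
ledger.transfer("bob", "carol", 10)
ledger.grant("g2", "dave", 50)
ledger.transfer("dave", "carol", 5)
g1_recipients = ledger.recipients_of("g1")
```

Here `g1_recipients` contains alice, bob, and carol, while dave never appears because his funds came from a different grant. The up-front checks in `transfer` are a small nod to the "failures must be handled deterministically" requirement: an overdraft raises before any balance is touched.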

We Are Not a Bank

Other than real and virtual currency transactions, we are not a bank. We shun over-designed solutions, always opting for low-friction, incremental changes to our product and system. We rapidly iterate, most often succeeding, sometimes failing, and always rapidly correcting. We live by the principle that live code getting real-world feedback is infinitely better than code living on someone’s laptop. We’ve moved to daily web application releases, and our high-scalability services team releases component by component ahead of the final feature rollout.

Get the Interface “Right”, Iterate the Implementation

Despite our “not a bank” highly iterative approach, we do draw a (dotted but darkening) line when it comes to defining what a component is and how other components interact with it. That’s because changing the interface to components is much harder than changing what’s under the hood. Given a few days to deliver a new service, our priorities are: (a) surviving the load, and (b) getting the interface right. We’ll gladly deploy a new service that has an inefficient (but liveable) implementation, knowing we can iterate on that implementation without any other team or component knowing. Sometimes that is easier said than done.
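The idea of fixing the interface and iterating on the implementation can be sketched with a small example. Everything here is hypothetical: `TitleSearch` is an invented interface, and the two classes stand for a first "inefficient but liveable" version and a later faster one. The caller, `render_results`, depends only on the interface, so swapping implementations requires no change on its side.

```python
from typing import Dict, Iterable, List, Protocol, Set


class TitleSearch(Protocol):
    """The contract callers depend on (hypothetical interface)."""

    def search(self, word: str) -> List[str]: ...


class LinearScanSearch:
    """First version: scans every title on every query."""

    def __init__(self, titles: Iterable[str]) -> None:
        self._titles = list(titles)

    def search(self, word: str) -> List[str]:
        needle = word.lower()
        return sorted({t for t in self._titles if needle in t.lower().split()})


class IndexedSearch:
    """Later version: same contract, backed by an inverted index."""

    def __init__(self, titles: Iterable[str]) -> None:
        self._index: Dict[str, Set[str]] = {}
        for title in titles:
            for token in title.lower().split():
                self._index.setdefault(token, set()).add(title)

    def search(self, word: str) -> List[str]:
        return sorted(self._index.get(word.lower(), set()))


def render_results(service: TitleSearch, word: str) -> str:
    """Caller code: knows the interface, not the implementation."""
    return ", ".join(service.search(word)) or "(no results)"


titles = ["Pizza Place", "Build a Boat", "Boat Race"]
v1 = LinearScanSearch(titles)
v2 = IndexedSearch(titles)

v1_output = render_results(v1, "boat")
v2_output = render_results(v2, "boat")
```

Both versions produce the same output for the same query, which is the property that lets one team replace the implementation without any other team or component knowing.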

Nonetheless, ROBLOX’s architecture allows for high scalability with fast development cycles, and our engineering team continues to develop a more sophisticated enterprise design as we scale out.